Detmar STRAUB, David GEFEN, and Jan RECKER

Shortcut to Sections

- Section 1: Welcome and Disclaimers

- Section 2: What is Quantitative, Positivist Research

- Section 3: Philosophical Foundations

- Section 4: Fundamentals of QtPR

- Section 5: The General QtPR Research Approach

- Section 6: Practical Tips for Writing QtPR Papers

- Section 7: Glossary

- Section 8: Bibliography

Section 1. Welcome and Disclaimers

Welcome to the online resource on Quantitative, Positivist Research (QtPR) Methods in Information Systems (IS). This resource seeks to address the needs of quantitative, positivist researchers in IS research – in particular those just beginning to learn to use these methods. IS research is a field that is primarily concerned with socio-technical systems comprising individuals and collectives that deploy digital information and communication technology for tasks in business, private, or social settings. We are ourselves IS researchers but this does not mean that the advice is not useful to researchers in other fields.

This webpage is a continuation and extension of an earlier online resource on Quantitative Positivist Research that was originally created and maintained by Detmar STRAUB, David GEFEN, and Marie BOUDREAU. As the original online resource hosted at Georgia State University is no longer available, this online resource republishes the original material plus updates and additions to make what is hoped to be valuable information accessible to IS scholars. Given that the last update of that resource was 2004, we also felt it prudent to update the guidelines and information to the best of our knowledge and abilities. If readers are interested in the original version, they can refer to a book chapter (Straub et al., 2005) that contains much of the original material.

1.1 Objective of this Website

This resource is dedicated to exploring issues in the use of quantitative, positivist research methods in Information Systems (IS). We intend to provide basic information about the methods and techniques associated with QtPR and to offer the visitor references to other useful resources and to seminal works.

1.2 Feedback

Suggestions on how best to improve on the site are very welcome. Please contact us directly if you wish to make suggestions on how to improve the site. No faults in content or design should be attributed to any persons other than ourselves since we made all relevant decisions on these matters. You can contact the co-editors at: straubdetmar@gmail.com, gefend@drexel.edu, and jan.christof.recker@uni-hamburg.de.

1.3 How to Navigate this Resource

This resource is structured into eight sections. You can scroll down or else simply click above on the shortcuts to the sections that you wish to explore next.

1.4 Explanation for Self-Citations

One of the main reasons we were interested in maintaining this online resource is that we have already published a number of articles and books on the subject. We felt that we needed to cite our own works as readily as others to give readers as much information as possible at their fingertips.

1.5 What This Resource Does Not Cover

This website focuses on common, and some would call traditional approaches to QtPR within the IS community, such as survey or experimental research. There are many other types of quantitative research that we only gloss over here, and there are many alternative ways to analyze quantitative data beyond the approaches discussed here. This is not to suggest in any way that these methods, approaches, and tools are not invaluable to an IS researcher. Only that we focus here on those genres that have traditionally been quite common in our field and that we as editors of this resource feel comfortable in writing about.

One such example of a research method that is not covered in any detail here would be meta-analysis. Meta-analyses are extremely useful to scholars in well-established research streams because they can highlight what is fairly well known in a stream, what appears not to be well supported, and what needs to be further explored. Importantly, they can also serve to change directions in a field. There are numerous excellent works on this topic, including the book by Hedges and Olkin (1985), which still stands as a good starter text, especially for theoretical development.

1.6 How to Cite this Resource

You can cite this online resource as:

Straub, D. W., Gefen, D., Recker, J., “Quantitative Research in Information Systems,” Association for Information Systems (AISWorld) Section on IS Research, Methods, and Theories, last updated March 25, 2022, http://www.janrecker.com/quantitative-research-in-information-systems/.

The original online resource that was previously maintained by Detmar Straub, David Gefen, and Marie-Claude Boudreau remains citable as a book chapter: Straub, D.W., Gefen, D., & Boudreau, M-C. (2005). Quantitative Research. In D. Avison & J. Pries-Heje (Eds.), Research in Information Systems: A Handbook for Research Supervisors and Their Students (pp. 221-238). Elsevier.

Section 2: What is Quantitative, Positivist Research (QtPR)

2.1 Cornerstones of Quantitative, Positivist Research

QtPR is a set of methods and techniques that allows IS researchers to answer research questions about the interaction of humans and digital information and communication technologies within the sociotechnical systems of which they are comprised. There are two cornerstones in this approach to research.

The first cornerstone is an emphasis on quantitative data. QtPR describes a set of techniques to answer research questions with an emphasis on state-of-the-art analysis of quantitative data, that is, types of data whose value is measured in the form of numbers, with a unique numerical value associated with each data set. As the name suggests, quantitative methods tend to specialize in “quantities,” in the sense that numbers are used to represent values and levels of measured variables that are themselves intended to approximate theoretical constructs. Often, the presence of numeric data is so dominant in quantitative methods that people assume that advanced statistical tools, techniques, and packages to be an essential element of quantitative methods. While this is often true, quantitative methods do not necessarily involve statistical examination of numbers. Simply put, QtPR focus on how you can do research with an emphasis on quantitative data collected as scientific evidence. Sources of data are of less concern in identifying an approach as being QtPR than the fact that numbers about empirical observations lie at the core of the scientific evidence assembled. A QtPR researcher may, for example, use archival data, gather structured questionnaires, code interviews and web posts, or collect transactional data from electronic systems. In any case, the researcher is motivated by the numerical outputs and how to imbue them with meaning.

The second cornerstone is an emphasis on (post-) positivist philosophy. As will be explained in Section 3 below, it should be noted that “quantitative, positivist research” is really just shorthand for “quantitative, post-positivist research.” Without delving into many details at this point, positivist researchers generally assume that reality is objectively given, that it is independent of the observer (researcher) and their instruments, and that it can be discovered by a researcher and described by measurable properties. Interpretive researchers, on the other hand, start out with the assumption that access to reality (given or socially constructed) is only through social constructions such as language, consciousness, and shared meanings. While these views do clearly differ, researchers in both traditions also agree on several counts. For example, both positivist and interpretive researchers agree that theoretical constructs, or important notions such as causality, are social constructions (e.g., responses to a survey instrument).

2.2 Quantitative, Positivist Research for Theory-Generation versus Theory-Evaluation

What are theories? There is a vast literature discussing this question and we will not embark on any kind of exegesis on this topic. A repository of theories that have been used in information systems and many other social science theories can be found at: https://guides.lib.byu.edu/c.php?g=216417&p=1686139.

In simple terms, in QtPR it is often useful to understand theory as a lawlike statement that attributes causality to sets of variables, although other conceptions of theory do exist and are used in QtPR and other types of research (Gregor, 2006). One common working definition that is often used in QtPR research refers to theory as saying “what is, how, why, when, where, and what will be. [It provides] predictions and has both testable propositions and causal explanations (Gregor, 2006, p. 620).”

QtPR can be used both to generate new theory as well as to evaluate theory proposed elsewhere. In theory-generating research, QtPR researchers typically identify constructs, build operationalizations of these constructs through measurement variables, and then articulate relationships among the identified constructs (Im & Wang, 2007). In theory-evaluating research, QtPR researchers typically use collected data to test the relationships between constructs by estimating model parameters with a view to maintain good fit of the theory to the collected data.

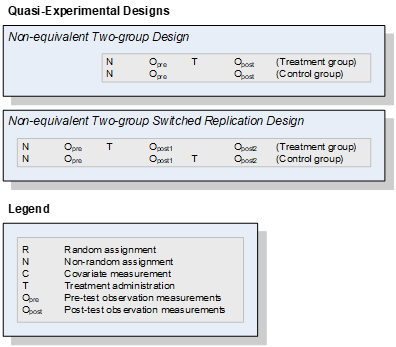

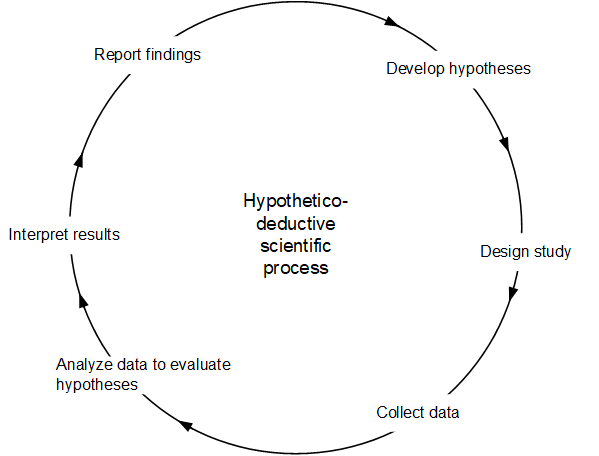

Traditionally, QtPR has been dominant in this second genre, theory-evaluation, although there are many applications of QtPR for theory-generation as well (e.g., Im & Wang, 2007; Evermann & Tate, 2011). Historically however, QtPR has by and large followed a particular approach to scientific inquiry, called the hypothetico-deductive model of science (Figure 1).

This model suggests that the underlying view that leads a scholar to conclude that QtPR can produce knowledge is that the world has an objective reality that can be captured and translated into models that imply testable hypotheses, usually in the form of statistical or other numerical analyses. In turns, a scientific theory is one that can be falsified through careful evaluation against a set of collected data.

The original inspiration for this approach to science came from the scientific epistemology of logical positivism during the 1920s and 1930s as developed by the Vienna Circle of Positivists, primarily Karl Popper,. This “pure” positivist attempt at viewing scientific exploration as a search for the Truth has been replaced in recent years with the recognition that ultimately all measurement is based on theory and hence capturing a truly “objective” observation is impossible (Coombs, 1976). Even the measurement of a purely physical attribute, such as temperature, depends on the theory of how materials expand in heat. Hence interpreting the readings of a thermometer cannot be regarded as a pure observation but itself as an instantiation of theory.

As suggested in Figure 1, at the heart of QtPR in this approach to theory-evaluation is the concept of deduction. Deduction is a form of logical reasoning that involves deriving arguments as logical consequences of a set of more general premises. It involves deducing a conclusion from a general premise (i.e., a known theory), to a specific instance (i.e., an observation). There are three main steps in deduction (Levallet et al. 2021):

- Testing internal consistency, i.e., verifying that there are no internal contradictions.

- Distinguishing between the logical basics of the theory and its empirical, testable, predictions.

- Empirical testing aimed at falsifying the theory with data. When the data do not contradict the hypothesized predictions of the theory, it is temporarily corroborated. The objective of this test is to falsify, not to verify, the predictions of the theory. Verifications can be found for almost any theory if one can pick and choose what to look at.

Whereas seeking to falsify theories is the idealistic and historical norm, in practice many scholars in IS and other social sciences are, in practice, seeking confirmation of their carefully argued theoretical models (Gray & Cooper, 2010; Burton-Jones et al., 2017). For example, QtPR scholars often specify what is called an alternative hypothesis rather than the null hypothesis (an expectation of no effect), that is, they typically formulate the expectation of a directional, signed effect of one variable on another. Doings so confers some analytical benefits (such as using a one-tailed statistical test rather than a two-tailed test), but the most likely reason for doing this is that confirmation, rather than disconfirmation of theories is a more common way of conducting QtPR in modern social sciences (Edwards & Berry, 2010; Mertens & Recker, 2020). In Popper’s falsification view, for example, one instance of disconfirmation disproves an entire theory, which is an extremely stringent standard. More information about the current state-of the-art follows later in section 3.2 below, which discusses Lakatos’ contributions to the philosophy of science.

In conclusion, recall that saying that QtPR tends to see the world as having an objective reality is not equivalent to saying that QtPR assumes that constructs and measures of these constructs are being or have been perfected over the years. In fact, Cook and Campbell (1979) make the point repeatedly that QtPR will always fall short of the mark of perfect representation. For this reason, they argue for a “critical-realist” perspective, positing that “causal relationships cannot be perceived with total accuracy by our imperfect sensory and intellective capacities” (p. 29). This is why we argue in more detail in Section 3 below that modern QtPR scientists have really adopted a post-positivist perspective.

2.3 What QtPR is Not

QtPR is not math analytical modeling, which typically depends on mathematical derivations and assumptions, sans data. This difference stresses that empirical data gathering or data exploration is an integral part of QtPR, as is the positivist philosophy that deals with problem-solving and the testing of the theories derived to test these understandings.

QtPR is also not design research, in which innovative IS artifacts are designed and evaluated as contributions to scientific knowledge. Models and prototypes are frequently the products of design research. In QtPR, models are also produced but most often causal models whereas design research stresses ontological models. Also, QtPR typically validates its findings through testing against empirical data whereas design research can also find acceptable validation of a new design through mathematical proofs of concept or through algorithmic analyses alone. Still, it should be noted that design researchers are increasingly using QtPR methods, specifically experimentation, to validate their models and prototypes so QtPR is also becoming a key tool in the arsenal of design science researchers.

QtPR is also not qualitative positivist research (QlPR) nor qualitative interpretive research. More information about qualitative research in both variants is available on an AIS-sponsored online resource. The simplest distinction between the two is that quantitative research focuses on numbers, and qualitative research focuses on text, most importantly text that captures records of what people have said, done, believed, or experienced about a particular phenomenon, topic, or event. Qualitative research emphasizes understanding of phenomena through direct observation, communication with participants, or analyses of texts, and at times stress contextual subjective accuracy over generality. What matters here is that qualitative research can be positivist (e.g., Yin, 2009; Clark, 1972; Glaser & Strauss, 1967) or interpretive (e.g., Walsham, 1995; Elden & Chisholm, 1993; Gasson, 2004). Without delving too deeply into the distinctions and their implications, one difference is that qualitative positive researchers generally assume that reality can be discovered to some extent by a researcher as well as described by measurable properties (which are social constructions) that are independent of the observer (researcher) and created instruments and instrumentation. Qualitative interpretive researchers start out with the assumption that access to reality (given or socially constructed) is only through social constructions such as language, consciousness, and shared meanings. Interpretive researchers generally attempt to understand phenomena through the meanings that people assign to them.

These nuances impact how quantitative or qualitative researchers conceive and use data, they impact how researchers analyze that data, and they impact the argumentation and rhetorical style of the research (Sarker et al., 2018). It does not imply that certain types of data (e.g., numerical data) is reserved for only one of the traditions. For example, QlPR scholars might interpret some quantitative data as do QtPR scholars. However, the analyses are typically different: QlPR might also use statistical techniques to analyze the data collected, but these would typically be descriptive statistics, t-tests of differences, or bivariate correlations, for example. More advanced statistical techniques are usually not favored, although of course, doing so is entirely possible (e.g., Gefen & Larsen, 2017).

Section 3. Philosophical Foundations

In what follows, we discuss at some length what have historically been the views about the philosophical foundations of science in general and QtPR in particular. We note that these are our own, short-handed descriptions of views that have been, and continue to be, debated at length in ongoing philosophy of science discourses. Readers interested primarily in the practical challenges of QtPR might want to skip this section. Also, readers with a more innate interest in the broader discussion of philosophy of science might want to consult the referenced texts and their cited texts directly.

3.1 A Brief Introduction to Positivism

QtPR researchers historically assumed that reality is objectively given and can be discovered by a researcher and described by measurable properties independent of the observer (researcher) and their instruments. This worldview is generally called positivism.

At the heart of positivism is Karl Popper’s dichotomous differentiation between “scientific” theories and “myth.” A scientific theory is a theory whose predictions can be empirically falsified, that is, shown to be wrong. Therefore, a scientific theory is by necessity a risky endeavor, i.e., it may be thrown out if not supported by the data. Einstein’s Theory of Relativity is a prime example, according to Popper, of a scientific theory. When Einstein proposed it, the theory may have ended up in the junk pile of history had its empirical tests not supported it, despite the enormous amount of work put into it and despite its mathematical appeal. The reason Einstein’s theory was accepted was because it was put to the test: Eddington’s eclipse observation in 1919 confirmed its predictions, predictions that were in contrast to what should have been seen according to Newtonian physics. Eddington’s eclipse observation was a make-or-break event for Einstein’s theory. The theory would have been discredited had the stars not appeared to move during the eclipse because of the Sun’s gravity. In contrast, according to Popper, is Freud’s theory of psychoanalysis which can never be disproven because the theory is sufficiently imprecise to allow for convenient “explanations” and the addition of ad hoc hypotheses to explain observations that contradict the theory. The ability to explain any observation as an apparent verification of psychoanalysis is no proof of the theory because it can never be proven wrong to those who believe in it. A scientific theory, in contrast to psychoanalysis, is one that can be empirically falsified. This is the Falsification Principle and the core of positivism. Basically, experience can show theories to be wrong, but can never prove them right. It is an underlying principle that theories can never be shown to be correct.

This demarcation of science from the myths of non-science also assumes that building a theory based on observation (through induction) does not make it scientific. Science, according to positivism, is about solving problems by unearthing truth. It is not about fitting theory to observations. That is why pure philosophical introspection is not really science either in the positivist view. Induction and introspection are important, but only as a highway toward creating a scientific theory. Central to understanding this principle is the recognition that there is no such thing as a pure observation. Every observation is based on some preexisting theory or understanding.

Furthermore, it is almost always possible to choose and select data that will support almost any theory if the researcher just looks for confirming examples. Accordingly, scientific theory, in the traditional positivist view, is about trying to falsify the predictions of the theory.

In theory, it is enough, in Popper’s way of thinking, for one observation that contradicts the prediction of a theory to falsify it and render it incorrect. Furthermore, even after being tested, a scientific theory is never verified because it can never be shown to be true, as some future observation may yet contradict it. Accordingly, a scientific theory is, at most, extensively corroborated, which can render it socially acceptable until proven otherwise. Of course, in reality, measurement is never perfect and is always based on theory. Hence, positivism differentiates between falsification as a principle, where one negating observation is all that is needed to cast out a theory, and its application in academic practice, where it is recognized that observations may themselves be erroneous and hence where more than one observation is usually needed to falsify a theory.

This notion that scientists can forgive instances of disproof as long as the bulk of the evidence still corroborates the base theory lies behind the general philosophical thinking of Imre Lakatos (1970). In Lakatos’ view, theories have a “hard core” of ideas, but are surrounded by evolving and changing supplemental collections of both hypotheses, methods, and tests – the “protective belt.” In this sense, his notion of theory was thus much more fungible than that of Popper.

In QtPR practice since World War II, moreover, social scientists have tended to seek out confirmation of a theoretical position rather than its disconfirmation, a la Popper. This is reflected in their dominant preference to describe not the null hypothesis of no effect but rather alternative hypotheses that posit certain associations or directions in sign. In other words, QtPR researchers are generally inclined to hypothesize that a certain set of antecedents predicts one or more outcomes, co-varying either positively or negatively. It needs to be noted that positing null hypotheses of no effect remains a convention in some disciplines; but generally speaking, QtPR practice favors stipulating certain directional effects and certain signs, expressed in hypotheses (Edwards & Berry, 2010). Overall, modern social scientists favor theorizing models with expressed causal linkages and predictions of correlational signs. Popper’s contribution to thought – specifically, that theories should be falsifiable – is still held in high esteem, but modern scientists are more skeptical that one conflicting case can disprove a whole theory, at least when gauged by which scholarly practices seem to be most prevalent.

3.2 From Positivism to Post-Positivism

We already noted above that “quantitative, positivist research” is really a shorthand for “quantitative, post-positivist research.” Whereas qualitative researchers sometimes take ownership of the concept of post-positivism, there is actually little quarrel among modern quantitative social scientists over the extent to which we can treat the realities of the world as somehow and truly “objective.” A brief history of the intellectual thought behind this may explain what is meant by this statement.

Flourishing for a brief period in the early 1900s, logical positivism, which argued that all natural laws could be reduced to the mathematics of logic, was one culmination of a deterministic positivism, but these ideas came out of a long tradition of thinking of the world as an objective reality best described by philosophical determinism. One could trace this lineage all the way back to Aristotle and his opposition to the “metaphysical” thought of Plato, who believed that the world as we see it has an underlying reality (forms) that cannot be objectively measured or determined. The only way to “see” that world, for Plato and Socrates, was to reason about it; hence, Plato’s philosophical dialecticism.

During more modern times, Henri de Saint-Simon (1760–1825), Pierre-Simon Laplace (1749–1827), Auguste Comte (1798–1857), and Émile Durkheim (1858–1917) were among a large group of intellectuals whose basic thinking was along the lines that science could uncover the “truths” of a difficult-to-see reality that is offered to us by the natural world. Science achieved this through the scientific method and through empiricism, which depended on measures that could pierce the veil of reality. With the advent of experimentalism especially in the 19th century and the discovery of many natural, physical elements (like hydrogen and oxygen) and natural properties like the speed of light, scientists came to believe that all natural laws could be explained deterministically, that is, at the 100% explained variance level. However, in 1927, German scientist Werner Heisenberg struck down this kind of thinking with his discovery of the uncertainty principle. This discovery, basically uncontended to this day, found that the underlying laws of nature (in Heisenberg’s case, the movement and position of atomic particles), were not perfectly predictable, that is to say, deterministic. They are stochastic. Ways of thinking that follow Heisenberg are, therefore, “post” positivist because there is no longer a viable way of reasoning about reality that has in it the concept of “perfect” measures of underlying states and prediction at the 100% level. These states can be individual socio-psychological states or collective states, such as those at the organizational or national level.

To illustrate this point, consider an example that shows why archival data can never be considered to be completely objective. Even the bottom line of financial statements is structured by human thinking. What is to be included in “revenues,” for example, is impacted by decisions about whether booked revenues can or should be coded as current period revenues. Accounting principles try to control this, but, as cases like Enron demonstrate, it is possible for reported revenues or earnings to be manipulated. In effect, researchers often need to make the assumption that the books, as audited, are accurate reflections of the firm’s financial health. Researchers who are permitted access to transactional data from, say, a firm like Amazon, are assuming, moreover, that the data they have been given is accurate, complete, and representative of a targeted population. But is it? Intermediaries may have decided on their own not to pull all the data the researcher requested, but only a subset. Their selection rules may then not be conveyed to the researcher who blithely assumes that their request had been fully honored. Finally, governmental data is certainly subject to imperfections, lower quality data that the researcher is her/himself unaware of. Adjustments to government unemployment data, for one small case, are made after the fact of the original reporting. Are these adjustments more or less accurate than the original figures? In the vast majority of cases, researchers are not privy to the process so that they could reasonably assess this. We might say that archival data might be “reasonably objective,” but it is not purely ”objective” By any stretch of the imagination. There is no such thing. All measures in social sciences, thus, are social constructions that can only approximate a true, underlying reality.

Our development and assessment of measures and measurements (Section 5) is another simple reflection of this line of thought. Within statistical bounds, a set of measures can be validated and thus considered to be acceptable for further empiricism. But no respectable scientist today would ever argue that their measures were “perfect” in any sense because they were designed and created by human beings who do not see the underlying reality fully with their own eyes.

How does this ultimately play out in modern social science methodologies? The emphasis in social science empiricism is on a statistical understanding of phenomena since, it is believed, we cannot perfectly predict behaviors or events. One major articulation of this was in Cook and Campbell’s seminal book Quasi-Experimentation (1979), later revised together with William Shadish (2001). In their book, they explain that deterministic prediction is not feasible and that there is a boundary of critical realism that scientists cannot go beyond.

Our argument, hence, is that IS researchers who work with quantitative data are not truly positivists, in the historical sense. We are all post-positivists. We can know things statistically, but not deterministically. While the positivist epistemology deals only with observed and measured knowledge, the post-positivist epistemology recognizes that such an approach would result in making many important aspects of psychology irrelevant because feelings and perceptions cannot be readily measured. In post-positivist understanding, pure empiricism, i.e., deriving knowledge only through observation and measurement, is understood to be too demanding. Instead, post-positivism is based on the concept of critical realism, that there is a real world out there independent of our perception of it and that the objective of science is to try and understand it, combined with triangulation, i.e., the recognition that observations and measurements are inherently imperfect and hence the need to measure phenomena in many ways and compare results. This post-positivist epistemology regards the acquisition of knowledge as a process that is more than mere deduction. Knowledge is acquired through both deduction and induction.

3.3 QtPR and Null Hypothesis Significance Testing

QtPR has historically relied on null hypothesis significance testing (NHST), a technique of statistical inference by which a hypothesized value (such as a specific value of a mean, a difference between means, correlations, ratios, variances, or other statistics) is tested against a hypothesis of no effect or relationship on basis of empirical observations (Pernet, 2016). With the caveat offered above that in scholarly praxis, null hypotheses are tested today only in certain disciplines, the underlying testing principles of NHST remain the dominant statistical approach in science today (Gigerenzer, 2004).

NHST originated from a debate that mainly took place in the first half of the 20th century between Fisher (e.g., 1935a, 1935b; 1955) on the one hand, and Neyman and Pearson (e.g., 1928, 1933) on the other hand. Fisher introduced the idea of significance testing involving the probability p to quantify the chance of a certain event or state occurring, while Neyman and Pearson introduced the idea of accepting a hypothesis based on critical rejection regions. Fisher’s idea is essentially an approach based on proof by contradiction (Christensen, 2005; Pernet, 2016): we pose a null model and test if our data conforms to it. This computation yields the probability of observing a result at least as extreme as a test statistic (e.g., a t value), assuming the null hypothesis of the null model (no effect) being true. This probability reflects the conditional, cumulative probability of achieving the observed outcome or larger: probability (Observation ≥ t | H0). Neyman and Pearson’s idea was a framework of two hypotheses: the null hypothesis of no effect and the alternative hypothesis of an effect, together with controlling the probabilities of making errors. This idea introduced the notions of control of error rates, and of critical intervals. Together, these notions allow distinguishing Type I (rejecting H0 when there is no effect) and Type II errors (not rejecting H0 when there is an effect).

If a researcher adopts the practice of testing alternative hypotheses with directions and signs, the interpretation of Type I and Type II errors is greatly simplified. From this standpoint, a Type I error occurs when a researcher finds a statistical effect in the tested sample, but, in the population, no such effect would have been found. A Type II error occurs when a researcher infers that there is no effect in the tested sample (i.e., the inference that the test statistic differs statistically significantly from the threshold), when, in fact, such an effect would have been found in the population. Regarding Type I errors, researchers are typically reporting p-values that are compared against an alpha protection level. The alpha protection levels are often set at .05 or lower, meaning that the researcher has at most only a 5% risk of being wrong and subject to a Type I error. Regarding Type II errors, it is important that researchers be able to report a beta statistic, which is the probability that they are correct and free of a Type II error. The standard value for betas has historically been set at .80 (Cohen 1988). This value means that researchers assume a 20% risk (1.0 – .80) that they are correct in their inference.

QtPR scholars sometime wonder why the thresholds for protection against Type I and Type II errors are so divergent. Consider that with alternative hypothesis testing, the researcher is arguing that a change in practice would be desirable (that is, a direction/sign is being proposed). If the inference is that this is true, then there needs to be smaller risk (at or below 5%) since a change in behavior is being advocated and this advocacy of change can be nontrivial for individuals and organizations. On the other hand, if no effect is found, then the researcher is inferring that there is no need to change current practices. Since no change in the status quo is being promoted, scholars are granted a larger latitude to make a mistake in whether this inference can be generalized to the population. However, one should remember that the .05 and .20 thresholds are no more than an agreed-upon convention. The p-value below .05 is there because when Mr. Pearson (of the Pearson correlation) was asked what he thought an appropriate threshold should be, and he said one in twenty would be reasonable. It is out of tradition and reverence to Mr. Pearson that it remains so.

One other caveat is that the alpha protection level can vary. Alpha levels in medicine are generally lower (and the beta level set higher) since the implications of Type I or Type II errors can be severe given that we are talking about human health. The convention is thus that we do not want to recommend that new medicines be taken unless there is a substantial and strong reason to believe that this can be generalized to the population (a low alpha). Likewise, with the beta: Clinical trials require fairly large numbers of subjects and so the effect of large samples makes it highly unlikely that what we infer from the sample will not readily generalize to the population.

As this discussion already illustrates, it is important to realize that applying NHST is difficult. Several threats are associated with the use of NHST in QtPR. These are discussed in some detail by Mertens and Recker (2020). Below we summarize some of the most imminent threats that QtPR scholars should be aware of in QtPR practice:

1. NHST is difficult to interpret. The p-value is not an indication of the strength or magnitude of an effect (Haller & Kraus, 2002). Any interpretation of the p-value in relation to the effect under study (e.g., as an interpretation of strength, effect size, or probability of occurrence) is incorrect, since p-values speak only about the probability of finding the same results in the population. In addition, while p-values are randomly distributed (if all the assumptions of the test are met) when there is no effect, their distribution depends on both the population effect size and the number of participants, making it impossible to infer the strength of an effect.

When the sample size n is relatively small but the p-value relatively low, that is, less than what the current conventional a-priori alpha protection level states, the effect size is also likely to be sizeable. However, this is a happenstance of the statistical formulas being used and not a useful interpretation in its own right. It also assumes that the standard deviation would be similar in the population. This is why p-values are not reliably about effect size.

In contrast, correlations are about the effect of one set of variables on another. Squaring the correlation r gives the R2, referred to as the explained variance. Explained variance describes the percent of the total variance (as the sum of squares of the residuals if one were to assume that the best predictor of the expected value of the dependent variable is its average) that is explained by the model variance (as the sum of squares of the residuals if one were to assume that the best predictor of the expected value of the dependent variable is the regression formula). Hence, r values are all about correlational effects whereas p-values are all about sampling (see below).

Similarly, 1-p is not the probability of replicating an effect (Cohen, 1994). Often, a small p-value is considered to indicate a strong likelihood of getting the same results on another try, but again this cannot be obtained because the p-value is not definitely informative about the effect itself (Miller, 2009). This reasoning hinges on power among other things. The power of a study is a measure of the probability of avoiding a Type II error. Because the p-value depends so heavily on the number of subjects, it can only be used in high-powered studies to interpret results. In low powered studies, the p-value may have too large a variance across repeated samples. The higher the statistical power of a test, the lower the risk of making a Type II error. Low power thus means that a statistical test only has a small chance of detecting a true effect or that the results are likely to be distorted by random and systematic error.

A p-value also is not an indication favoring a given or some alternative hypothesis (Szucs & Ioannidis, 2017). Because a low p-value only indicates a misfit of the null hypothesis to the data, it cannot be taken as evidence in favor of a specific alternative hypothesis more than any other possible alternatives such as measurement error and selection bias (Gelman, 2013).

The p-value also does not describe the probability of the null hypothesis p(H0) being true (Schwab et al., 2011). This common misconception arises from a confusion between the probability of an observation given the null probability (Observation ≥ t | H0) and the probability of the null given an observation probability (H0 | Observation ≥ t) that is then taken as an indication for p(H0).

In interpreting what the p-value means, it is therefore important to differentiate between the mathematical expression of the formula and its philosophical application. Mathematically, what we are doing in statistics, for example in a t-test, is to estimate the probability of obtaining the observed result or anything more extreme in the available sample data than that was actually observed, assuming that (1) the null hypothesis holds true in the population and (2) all underlying model and test assumptions are met (McShane & Gal, 2017). Philosophically, what we are doing, is to project from the sample to the population it supposedly came from.

This distinction is important. When we compare two means(or in other tests standard deviations or ratios etc.), there is no doubt mathematically that if the two means in the sample are not exactly the same number, then they are different. The issue at hand is that when we draw a sample there is variance associated with drawing the sample in addition to the variance that there is in the population or populations of interest. Philosophically what we are addressing in these statistical tests is whether the difference that we see in the statistics of interest, such as the means, is large enough in the sample or samples that we feel confident in saying that there probably is a difference also in the population or populations that the sample or samples came from. For example, experimental studies are based on the assumption that the sample was created through random sampling and is reasonably large. Only then, based on the law of large numbers and the central limit theorem can we upheld (a) a normal distribution assumption of the sample around its mean and (b) the assumption that the mean of the sample approximates the mean of the population (Miller & Miller 2012). Obtaining such a standard might be hard at times in experiments but even more so in other forms of QtPR research; however, researchers should at least acknowledge it as a limitation if they do not actually test it, by using, for example, a Kolmogorov-Smirnoff test of the normality of the data or an Anderson-Darling test (Corder & Foreman, 2014).

2. NHST is highly sensitive to sampling strategy. As noted above, the logic of NHST demands a large and random sample because results from statistical analyses conducted on a sample are used to draw conclusions about the population, and only when the sample is large and random can its distribution assumed to be a normal distribution. If samples are not drawn independently, or are not selected randomly, or are not selected to represent the population precisely, then the conclusions drawn from NHST are thrown into question because it is impossible to correct for unknown sampling bias.

3. The Effect of Big Data on Hypothesis Testing. With a large enough sample size, a statistically significant rejection of a null hypothesis can be highly probable even if an underlying discrepancy in the examined statistics (e.g., the differences in means) is substantively trivial. Sample size sensitivity occurs in NHST with so-called point-null hypotheses (Edwards & Berry, 2010), i.e., predictions expressed as point values. A researcher that gathers a large enough sample can reject basically any point-null hypothesis because the confidence interval around the null effect often becomes very small with a very large sample (Lin et al., 2013; Guo et al., 2014). But even more so, in an world of big data, p-value testing alone and in a traditional sense is becoming less meaningful because large samples can rule out even the small likelihood of either Type I or Type II errors (Guo et al., 2014). It is entirely possible to have statistically significant results with only very marginal effect sizes (Lin et al., 2013). As Guo et al. (2014) point out, even extremely weak effects of r = .005 become statistically significant at some level of N and in the case of regression with two IVs, this result becomes statistically significant for all levels of effect size at a N of only 500.

The practical implication is that when researchers are working with big data, they need not be concerned that they will get significant effects, but why all of their hypotheses are not significant. If at an N of 15,000 (see Guo et al., 2014, p. 243), the only reason why weak t-values in all models are not supported is that there is likely a problem with the data itself. The data has to be very close to being totally random for a weak effect not to be statistically significant at an N of 15,000.

4. NHST logic is incomplete. NHST rests on the formulation of a null hypothesis and its test against a particular set of data. This tactic relies on the so-called modus tollens (denying the consequence) (Cohen, 1994) – a much used logic in both positivist and interpretive research in IS (Lee & Hubona, 2009). While modus tollens is logically correct, problems in its application can still arise. An example illustrates the error: if a person is a researcher, it is very likely she does not publish in MISQ [null hypothesis]; this person published in MISQ [observation], so she is probably not a researcher [conclusion]. This logic is, evidently, flawed. In other words, the logic that allows for the falsification of a theory loses its validity when uncertainty and/or assumed probabilities are included in the premises. And, yet both uncertainty (e.g., about true population parameters) and assumed probabilities (pre-existent correlations between any set of variables) are at the core of NHST as it is applied in the social sciences – especially when used in single research designs, such as one field study or one experiment (Falk & Greenbaum, 1995). That is, in social reality, no two variables are ever perfectly unrelated (Meehl, 1967).

Section 4: Fundamentals of QtPR

4.1 The Importance of Measurement

Because of its focus on quantities that are collected to measure the state of variable(s) in real-world domains, QtPR depends heavily on exact measurement. This is because measurement provides the fundamental connection between empirical observation and the theoretical and mathematical expression of quantitative relationships. It is also vital because many constructs of interest to IS researchers are latent, meaning that they exist but not in an immediately evident or readily tangible way. Appropriate measurement is, very simply, the most important thing that a quantitative researcher must do to ensure that the results of a study can be trusted.

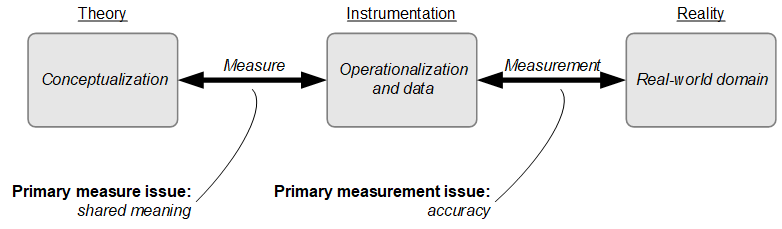

Figure 2 describes in simplified form the QtPR measurement process, based on the work of Burton-Jones and Lee (2017). Typically, QtPR starts with developing a theory that offers a hopefully insightful and novel conceptualization of some important real-world phenomena. In attempting to falsify the theory or to collect evidence in support of that theory, operationalizations in the form of measures (individual variables or statement variables) are needed and data needs to be collected from empirical referents (phenomena in the real world that the measure supposedly refers to). Figure 2 also points to two key challenges in QtPR. Moving from the left (theory) to the middle (instrumentation), the first issue is that of shared meaning. If researchers fail to ensure shared meaning between their socially constructed theoretical constructs and their operationalizations through measures they define, an inherent limit will be placed on their ability to measure empirically the constructs about which they theorized. Taking steps to obtain accurate measurements (the connection between real-world domain and the concepts’ operationalization through a measure) can reduce the likelihood of problems on the right side of Figure 2, affecting the data (accuracy of measurement). However, even if complete accuracy were obtained, the measurements would still not reflect the construct theorized because of the lack of shared meaning. As a simple example, consider the scenario that your research is about individuals’ affections when working with information technology and the behavioral consequences of such affections. An issue of shared meaning could occur if, for instance, you are attempting to measure “compassion.” How do you know that you are measuring “compassion” and not, say, “empathy”, which is a socially constructed concept that to many has a similar meaning?

Likewise, problems manifest if accuracy of measurement is not assured. No matter through which sophisticated ways researchers explore and analyze their data, they cannot have faith that their conclusions are valid (and thus reflect reality) unless they can accurately demonstrate the faithfulness of their data.

Understanding and addressing these challenges are important, independent from whether the research is about confirmation or exploration. In research concerned with confirmation, problems accumulate from the left to the right of Figure 2: If researchers fail to ensure shared meaning between their theoretical constructs and operationalizations, this restricts their ability to measure faithfully the constructs they theorized. In research concerned with exploration, problems tend to accumulate from the right to the left of Figure 2: No matter how well or systematically researchers explore their data, they cannot guarantee that their conclusions reflect reality unless they first take steps to ensure the accuracy of their data.

To avoid these problems, two key requirements must be met to avoid problems of shared meaning and accuracy and to ensure high quality of measurement:

- The variables that are chosen as operationalizations to measure a theoretical construct must share its meaning (in all its complexity if needed). This step concerns the validity of the measures.

- The variables that are chosen as operationalizations must also guarantee that data can be collected from the selected empirical referents accurately (i.e., consistently and precisely). This step concerns the reliability of measurement.

Together, validity and reliability are the benchmarks against which the adequacy and accuracy (and ultimately the quality) of QtPR are evaluated. To assist researchers, useful Respositories of measurement scales are available online. See for example: https://en.wikibooks.org/wiki/Handbook_of_Management_Scales.

4.2 Validity

Validity describes whether the operationalizations and the collected data share the true meaning of the constructs that the researchers set out to measure. Valid measures represent the essence or content upon which the construct is focused. For instance, recall the challenge of measuring “compassion”: A question of validity is to demonstrate that measurements are focusing on compassion and not on empathy or other related constructs.

There are different types of validity that are important to identify. Some of them relate to the issue of shared meaning and others to the issue of accuracy. In turn, there are theoretical assessments of validity (for example, for content validity,), which assess how well an operationalized measure fits the conceptual definition of the relevant theoretical construct; and empirical assessments of validity (for example, for convergent and discriminant validity), which assess how well collected measurements behave in relation to the theoretical expectations. Note that both theoretical and empirical assessments of validity are key to ensuring validity of study results.

Content validity in our understanding refers to the extent to which a researcher’s conceptualization of a construct is reflected in her operationalization of it, that is, how well a set of measures match with and capture the relevant content domain of a theoretical construct (Cronbach, 1971). But as with many other concepts, one should note that other characterizations of content validity also exist (e.g., Rossiter, 2011).

The key question of content validity in our understanding is whether the instrumentation (questionnaire items, for example) pulls in a representative manner all of the ways that could be used to measure the content of a given construct (Straub et al., 2004). Content validity is important because researchers have many choices in creating means of measuring a construct. Did they choose wisely so that the measures they use capture the essence of the construct? They could, of course, err on the side of inclusion or exclusion. If they include measures that do not represent the construct well, measurement error results. If they omit measures, the error is one of exclusion. Suppose you included “satisfaction with the IS staff” in your measurement of a construct called User Information Satisfaction but you forgot to include “satisfaction with the system” itself? Other researchers might feel that you did not draw well from all of the possible measures of the User Information Satisfaction construct. They could legitimately argue that your content validity was not the best. Assessments may include an expert panel that peruse a rating scheme and/or a qualitative assessment technique such as the Q-sort method (Block, 1961).

Construct validity is an issue of operationalization and measurement between constructs. With construct validity, we are interested in whether the instrumentation allows researchers to truly capture measurements for constructs in a way that is not subject to common methods bias and other forms of bias. For example, construct validity issues occur when some of the questionnaire items, the verbiage in the interview script, or the task descriptions in an experiment are ambiguous and are giving the participants the impression that they mean something different from what was intended.

Problems with construct validity occur in three major ways. Items or phrases in the instrumentation are not related in the way they should be, or they are related in the ways they should not be. If items do not converge, i.e., measurements collected with them behave statistically different from one another, it is called a convergent validity problem. If they do not segregate or differ from each other as they should, then it is called a discriminant validity problem.

Nomological validity assesses whether measurements and data about different constructs correlate in a way that matches how previous literature predicted the causal (or nomological) relationships of the underlying theoretical constructs. So, essentially, we are testing whether our obtained data fits previously established causal models of the phenomenon including prior suggested classifications of constructs (e.g., as independent, dependent, mediating, or moderating). If there are clear similarities, then the instrument items can be assumed to be reasonable, at least in terms of their nomological validity.

There are numerous ways to assess construct validity (Straub, Boudreau, and Gefen, 2004; Gefen, Straub, and Boudreau, 2000; Straub, 1989). Typically, researchers use statistical, correlational logic, that is, they attempt to establish empirically that items that are meant to measure the same constructs have similar scores (convergent validity) whilst also being dissimilar to scores of measures that are meant to measure other constructs (discriminant validity) This is usually done by comparing item correlations and looking for high correlations between items of one construct and low correlations between those items and items associated with other constructs. Other tests include factor analysis (a latent variable modeling approach) or principal component analysis (a composite-based analysis approach), both of which are tests to assess whether items load appropriately on constructs represented through a mathematically latent variable (a higher order factor). In this context, loading refers to the correlation coefficient between each measurement item and its latent factor. If items load appropriately high (viz., above 0.7), we assume that they reflect the theoretical constructs. Tests of nomological validity typically involve comparing relationships between constructs in a “network” of theoretical constructs with theoretical networks of constructs previously established in the literature and which may involve multiple antecedent, mediator, and outcome variables. The idea is to test a measurement model established given newly collected data against theoretically-derived constructs that have been measured with validated instruments and tested against a variety of persons, settings, times, and, in the case of IS research, technologies, in order to make the argument more compelling that the constructs themselves are valid (Straub et al. 2004). Often, such tests can be performed through structural equation modelling or moderated mediation models.

Internal validity assesses whether alternative explanations of the dependent variable(s) exist that need to be ruled out (Straub, 1989). It differs from construct validity, in that it focuses on alternative explanations of the strength of links between constructs whereas construct validity focuses on the measurement of individual constructs. Shadish et al. (2001) distinguish three factors of internal validity, these being (1) temporal precedence of IVs before DVs; (2) covariation; and (3) the ability to show the predictability of the current model variables over other, missing variables (“ruling out rival hypotheses”).

Challenges to internal validity in econometric and other QtPR studies are frequently raised using the rubric of “endogeneity concerns.” Endogeneity is an important issue because issues such as omitted variables, omitted selection, simultaneity, common-method variance, and measurement error all effectively render statistically estimates causally uninterpretable (Antonakis et al., 2010). Statistically, the endogeneity problem occurs when model variables are highly correlated with error terms. From a practical standpoint, this almost always happens when important variables are missing from the model. Hence, the challenge is what Shadish et al. (2001) are referring to in their third criterion: How can we show we have reasonable internal validity and that there are not key variables missing from our models?

Historically, internal validity was established through the use of statistical control variables. (Note that this is an entirely different concept from the term “control” used in an experiment where it means that one or more groups have not gotten an experimental treatment; to differentiate it from controls used to discount other explanations of the DV, we can call these “experimental controls.”) Statistical control variables are added to models to demonstrate that there is little-to-no explained variance associated with the designated statistical controls. Typical examples of statistical control variables in many QtPR IS studies are measurements of the size of firm, type of industry, type of product, previous experience of the respondents with systems, and so forth. Other endogeneity tests of note include the Durbin-Wu-Hausman (DWH) test and various alternative tests commonly carried out in econometric studies (Davidson and MacKinnon, 1993). If the DWH test indicates that there may be endogeneity, then the researchers can use what are called “instrumental variables” to see if there are indeed missing variables in the model. An overview of endogeneity concerns and ways to address endogeneity issues through methods such as fixed-effects panels, sample selection, instrumental variables, regression discontinuity, and difference-in-differences models, is given by Antonakis et al. (2010). More discussion on how to test endogeneity is available in Greene (2012).

Manipulation validity is used in experiments to assess whether an experimental group (but not the control group) is faithfully manipulated – and we can thus reasonably trust that any observed group differences are in fact attributable to the experimental manipulation. This form of validity is discussed in greater detail, including stats for assessing it, in Straub, Boudreau, and Gefen (2004). Suffice it to say at this point that in experiments, it is critical that the subjects are manipulated by the treatments and, conversely, that the control group is not manipulated. Checking for manipulation validity differs by the type and the focus of the experiment, and its manipulation and experimental setting. In some (nut not all) experimental studies, one way to check for manipulation validity is to ask subjects, provided they are capable of post-experimental introspection: Those who were aware that they were manipulated are testable subjects (rather than noise in the equations). In fact, those who were not aware, depending on the nature of the treatments, may be responding as if they were assigned to the control group.

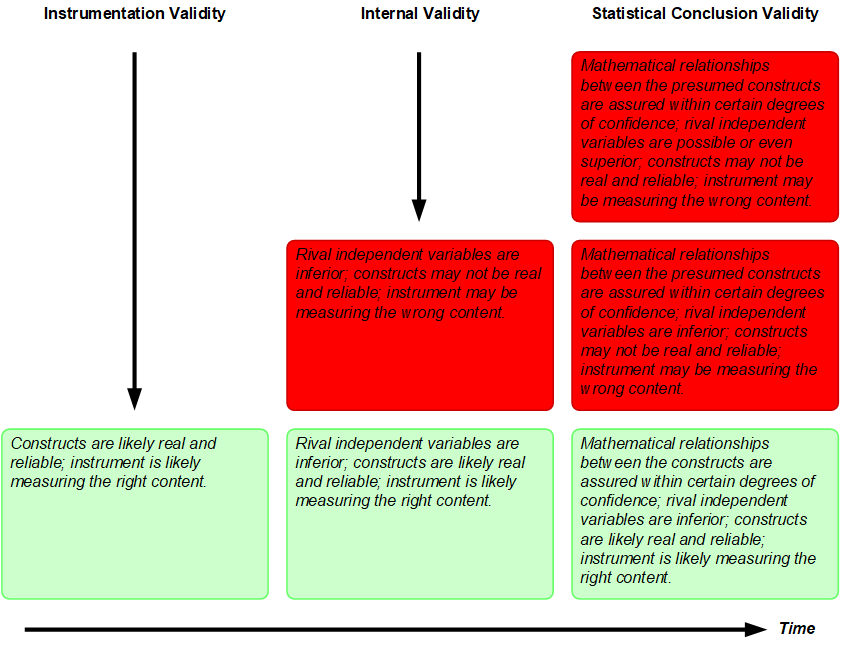

In closing, we note that the literature also mentions other categories of validity. For example, statistical conclusion validity tests the inference that the dependent variable covaries with the independent variable, as well as that of any inferences regarding the degree of their covariation (Shadish et al., 2001). Type I and Type II errors are classic violations of statistical conclusion validity (Garcia-Pérez, 2012; Shadish et al., 2001). Predictive validity (Cronbach & Meehl, 1955) assesses the extent to which a measure successfully predicts a future outcome that is expected and practically meaningful. Finally, ecological validity (Shadish et al., 2001) assesses the ability to generalize study findings from an experimental setting to a set of real-world settings. High ecological validity means researchers can generalize the findings of their research study to real-life settings. We note that at other times, we have discussed ecological validity as a form of external validity (Im & Straub, 2015).

4.3 Reliability

Reliability describes the extent to which a measurement variable or set of variables is consistent in what it is intended to measure across multiple applications of measurements (e.g., repeated measurements or concurrently through alternative measures). If multiple measurements are taken, reliable measurements should all be consistent in their values.

Reliability is important to the scientific principle of replicability because reliability implies that the operations of a study can be repeated in equal settings with the same results. Consider the example of weighing a person. An unreliable way of measuring weight would be to ask onlookers to guess a person’s weight. Most likely, researchers will receive different answers from different persons (and perhaps even different answers from the same person if asked repeatedly). A more reliable way, therefore, would be to use a scale. Unless the person’s weight actually changes in the times between stepping repeatedly on to the scale, the scale should consistently, within measurement error, give you the same results. Note, however, that a mis-calibrated scale could still give consistent (but inaccurate) results. This example shows how reliability ensures consistency but not necessarily accuracy of measurement. Reliability does not guarantee validity.

Sources of reliability problems often stem from a reliance on overly subjective observations and data collections. All types of observations one can make as part of an empirical study inevitably carry subjective bias because we can only observe phenomena in the context of our own history, knowledge, presuppositions, and interpretations at that time. This is why often in QtPR researchers often look to replace observations made by the researcher or other subjects with other, presumably more “objective” data such as publicly verified performance metrics rather than subjectively experienced performance. Other sources of reliability problems stem from poorly specified measurements, such as survey questions that are imprecise or ambiguous, or questions asked of respondents who are either unqualified to answer, unfamiliar with, predisposed to a particular type of answer, or uncomfortable to answer.

Different types of reliability can be distinguished: Internal consistency (Streiner, 2003) is important when dealing with multidimensional constructs. It measures whether several measurement items that propose to measure the same general construct produce similar scores. The most common test is through Cronbach’s (1951) alpha, however, this test is not without problems. One problem with Cronbach alpha is that it assumes equal factor loadings, aka essential tau-equivalence. An alternative to Cronbach alpha that does not assume tau-equivalence is the omega test (Hayes and Coutts, 2020). The omega test has been made available in recent versions of SPSS; it is also available in other statistical software packages. Another problem with Cronbach’s alpha is that a higher alpha can most often be obtained simply by adding more construct items in that alpha is a function of k items. In other words, many of the items may not be highly interchangeable, highly correlated, reflective items (Jarvis et al., 2003), but this will not be obvious to researchers unless they examine the impact of removing items one-by-one from the construct.

Interrater reliability is important when several subjects, researchers, raters, or judges code the same data(Goodwin, 2001). Often, we approximate “objective” data through “inter-subjective” measures in which a range of individuals (multiple study subjects or multiple researchers, for example) all rate the same observation – and we look to get consistent, consensual results. Consider, for example, that you want to score student thesis submissions in terms of originality, rigor, and other criteria. We typically have multiple reviewers of such thesis to approximate an objective grade through inter-subjective rating until we reach an agreement. In scientific, quantitative research, we have several ways to assess interrater reliability. Cohen’s (1960) coefficient Kappa is the most commonly used test. Pearson’s or Spearman correlations, or percentage agreement scores are also used (Goodwin, 2001).

Straub, Boudreau, and Gefen (2004) introduce and discuss a range of additional types of reliability such as unidimensional reliability, composite reliability, split-half reliability, or test-retest reliability. They also list the different tests available to examine reliability in all its forms.

The demonstration of reliable measurements is a fundamental precondition to any QtPR study: Put very simply, the study results will not be trusted (and thus the conclusions foregone) if the measurements are not consistent and reliable. And because even the most careful wording of questions in a survey, or the reliance on non-subjective data in data collection does not guarantee that the measurements obtained will indeed be reliable, one precondition of QtPR is that instruments of measurement must always be tested for meeting accepted standards for reliability.

4.4 Developing and Assessing Measures and Measurements

Establishing reliability and validity of measures and measurement is a demanding and resource-intensive task. It is by no means “optional.” Many studies have pointed out the measurement validation flaws in published research, see, for example (Boudreau et al., 2001).

Because developing and assessing measures and measurement is time-consuming and challenging, researchers should first and always identify existing measures and measurements that have already been developed and assessed, to evaluate their potential for reuse. Aside from reducing effort and speeding up the research, the main reason for doing so is that using existing, validated measures ensures comparability of new results to reported results in the literature: analyses can be conducted to compare findings side-by-side. However, critical judgment is important in this process because not all published measurement instruments have in fact been thoroughly developed or validated; moreover, standards and knowledge about measurement instrument development and assessment themselves evolve with time. For example, several historically accepted ways to validate measurements (such as approaches based on average variance extracted, composite reliability, or goodness of fit indices) have later been criticized and eventually displaced by alternative approaches. As an example, Henseler et al. (2015) propose to evaluate heterotrait-monotrait correlation ratios instead of the traditional Fornell-Larcker criterion and the examination of cross-loadings when evaluating discriminant validity of measures.

There are great resources available that help researchers to identify reported and validated measures as well as measurements. For example, the Inter-Nomological Network (INN, https://inn.theorizeit.org/), developed by the Human Behavior Project at the Leeds School of Business, is a tool designed to help scholars to search the available literature for constructs and measurement variables (Larsen & Bong, 2016). Other management variables are listed on a wiki page.

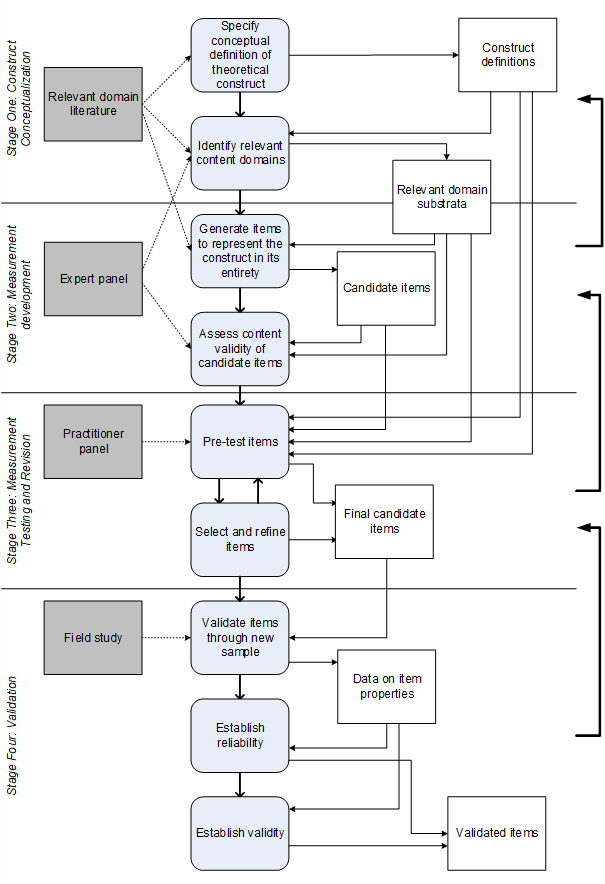

When new measures or measurements need to be developed, the good news is that ample guidelines exist to help with this task. Historically, QtPR scholars in IS research often relied on methodologies for measurement instrument development that build on the work by Churchill in the field of marketing (Churchill, 1979). Figure 3 shows a simplified procedural model for use by QtPR researchers who wish to create new measurement instruments for conceptually defined theory constructs. The procedure shown describes a blend of guidelines available in the literature, most importantly (MacKenzie et al., 2011; Moore & Benbasat, 1991). It incorporates techniques to demonstrate and assess the content validity of measures as well as their reliability and validity. It separates the procedure into four main stages and describes the different tasks to be performed (grey rounded boxes), related inputs and outputs (white rectangles), and the relevant literature or sources of empirical data required to carry out the tasks (dark grey rectangles).

It is important to note that the procedural model as shown in Figure 3 describes this process as iterative and discrete, which is a simplified and idealized model of the actual process. In reality, any of the included stages may need to be performed multiple times and it may be necessary to revert to an earlier stage when the results of a later stage do not meet expectations. Also note that the procedural model in Figure 3 is not concerned with developing theory; rather it applies to the stage of the research where such theory exists and is sought to be empirically tested. In other words, the procedural model described below requires the existence of a well-defined theoretical domain and the existence of well-specified theoretical constructs.

The first stage of the procedural model is construct conceptualization, which is concerned with defining the conceptual content domain of a construct. This task involves identifying and carefully defining what the construct is intended to conceptually represent or capture, discussing how the construct differs from other related constructs that may already exist, and defining any dimensions or domains that are relevant to grasping and clearly defining the conceptual theme or content of the construct it its entirety. MacKenzie et al. (2011) provide several recommendations for how to specify the content domain of a construct appropriately, including defining its domain, entity, and property.

A common problem at this stage is that researchers assume that labelling a construct with a name is equivalent to defining it and specifying its content domains: It is not. As a rule of thumb, each focal construct needs (1) a label, (2) a definition, (3) ideally one or more examples that demonstrate its meaning, and ideally (4) a discussion of related constructs in the literature, and (5) a discussion of the focal construct’s likely nomological net and its position within (e.g., as independent factor, as mediating or moderating factor, or as dependent factor).

The next stage is measurement development, where pools of candidate measurement items are generated for each construct. This task can be carried out through an analysis of the relevant literature or empirically by interviewing experts or conducting focus groups. This stage also involves assessing these candidate items, which is often carried out through expert panels that need to sort, rate, or rank items in relation to one or more content domains of the constructs. There are several good illustrations in the literature to exemplify how this works (e.g., Doll & Torkzadeh, 1998; MacKenzie et al., 2011; Moore & Benbasat, 1991).

The third stage, measurement testing and revision, is concerned with “purification”, and is often a repeated stage where the list of candidate items is iteratively narrowed down to a set of items that are fit for use. As part of that process, each item should be carefully refined to be as accurate and exact as possible. Often, this stage is carried out through pre- or pilot-tests of the measurements, with a sample that is representative of the target research population or else another panel of experts to generate the data needed. Repeating this stage is often important and required because when, for example, measurement items are removed, the entire set of measurement item changes, the result of the overall assessment may change, as well as the statistical properties of individual measurement items remaining in the set.

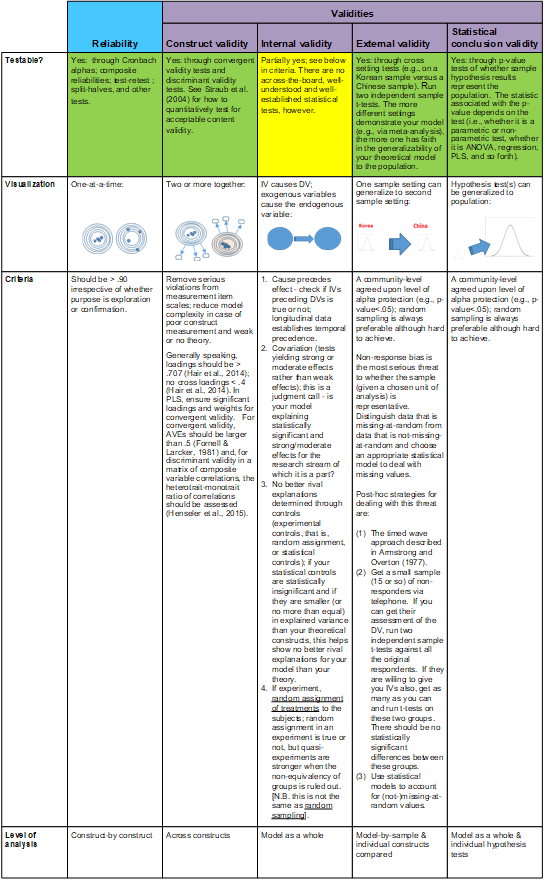

The final stage is validation, which is concerned with obtaining statistical evidence for reliability and validity of the measures and measurements. This task can be fulfilled by performing any field-study QtPR method (such as a survey or experiment) that provides a sufficiently large number of responses from the target population of the respective study. The key point to remember here is that for validation, a new sample of data is required – it should be different from the data used for developing the measurements, and it should be different from the data used to evaluate the hypotheses and theory. Figure 4 summarizes criteria and tests for assessing reliability and validity for measures and measurements. More details on measurement validation are discussed in Section 5 below.

Section 5: The General QtPR Research Approach

5.1 Defining the Purpose of a Study

Initially, a researcher must decide what the purpose of their specific study is: Is it confirmatory or is it exploratory research? Hair et al. (2010) suggest that confirmatory studies are those seeking to test (i.e., estimating and confirming) a prespecified relationship, whereas exploratory studies are those that define possible relationships in only the most general form and then allow multivariate techniques to search for non-zero or “significant” (practically or statistically) relationships. In the latter case, the researcher is not looking to “confirm” any relationships specified prior to the analysis, but instead allows the method and the data to “explore” and then define the nature of the relationships as manifested in the data.

5.2 Distinguishing Methods from Techniques

One of the most common issues in QtPR papers is mistaking data collection for method(s). When authors say their method was a survey, for example, they are telling the readers how they gathered the data, but they are not really telling what their method was. For example, their method could have been some form of an experiment that used a survey questionnaire to gather data before, during, or after the experiment. Or, the questionnaire could have been used in an entirely different method, such as a field study of users of some digital platform.

The same thing can be said about many econometric studies and other studies using archival data or digital trace data from an organization. Saying that the data came from an ecommerce platform or from scraping posts at a website is not a statement about method. It is simply a description of where the data came from.

Therefore, QtPR can involve different techniques for data collection and analysis, just as qualitative research can involve different techniques for data collection (such as focus groups, case study, or interviews) and data analysis (such as content analysis, discourse analysis, or network analysis).

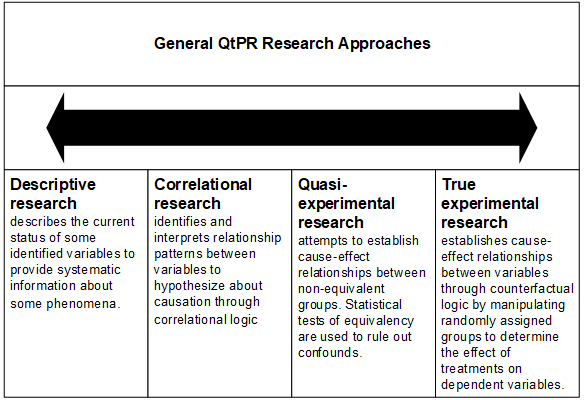

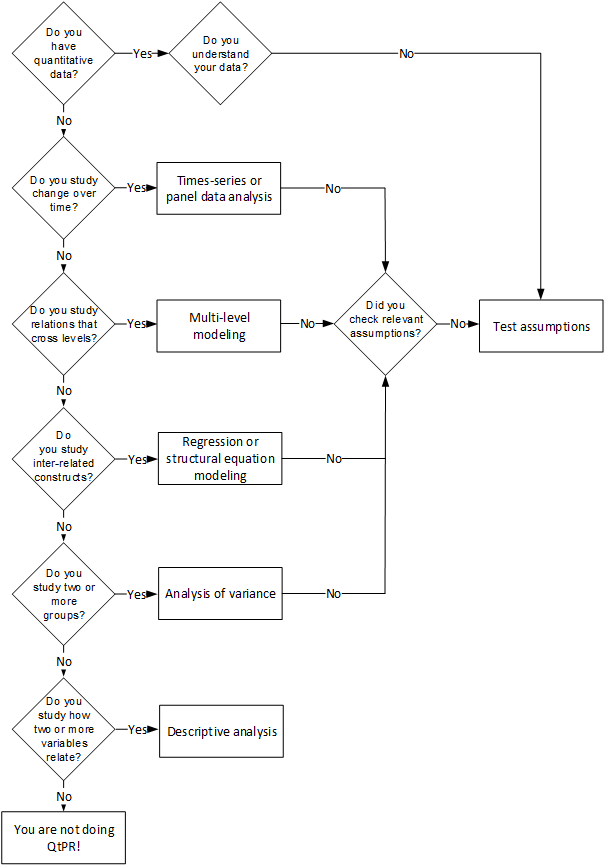

To understand different types of QtPR methods, it is useful to consider how a researcher designs for variable control and randomization in the study. This allows comparing methods according to their validities (Stone, 1981). In this perspective, QtPR methods lie on a continuum from study designs where variables are merely observed but not controlled to study designs where variables are very closely controlled. Likewise, QtPR methods differ in the extent to which randomization is employed during data collection (e.g., during sampling or manipulations). Figure 5 uses these distinctions to introduce a continuum that differentiates four main types of general research approaches to QtPR.

Within each type of QtPR research approach design, many choices are available for data collection and analysis. It should be noted that the choice of a type of QtPR research (e.g., descriptive or experimental) does not strictly “force” a particular data collection or analysis technique. It may, however, influence it, because different techniques for data collection or analysis are more or less well suited to allow or examine variable control; and likewise different techniques for data collection are often associated with different sampling approaches (e.g., non-random versus random). For example, using a survey instrument for data collection does not allow for the same type of control over independent variables as a lab or field experiment. Or, experiments often make it easier for QtPR researchers to use a random sampling strategy in comparison to a field survey. Similarly, the choice of data analysis can vary: For example, covariance structural equation modeling does not allow determining the cause-effect relationship between independent and dependent variables unless temporal precedence is included. Different approaches follow different logical traditions (e.g., correlational versus counterfactual versus configurational) for establishing causation (Antonakis et al., 2010; Morgan & Winship. 2015).

Typically, a researcher will decide for one (or multiple) data collection techniques while considering its overall appropriateness to their research, along with other practical factors, such as: desired and feasible sampling strategy, expected quality of the collected data, estimated costs, predicted nonresponse rates, expected level of measure errors, and length of the data collection period (Lyberg and Kasprzyk, 1991). It is, of course, possible that a given research question may not be satisfactorily studied because specific data collection techniques do not exist to collect the data needed to answer such a question (Kerlinger, 1986).